Artificial intelligence is rapidly transforming how organizations operate, make decisions, and deliver value to customers. From financial services approving loans to healthcare providers recommending treatments, AI systems increasingly influence outcomes that affect people’s lives. Yet adoption is not solely a matter of technical capability. Trust remains the defining factor. For businesses to rely on AI in critical decision-making, they must ensure that systems are transparent and understandable. This is where explainability becomes central.

Explainability is more than a compliance requirement. It is the foundation of the architecture of trust that organizations must build to fully harness AI while protecting stakeholders and reputations.

Why Explainability Matters

Traditional analytics and business intelligence systems have long provided clear, traceable logic. Decision-makers could see the inputs and understand the reasoning. AI models, particularly deep learning systems, often operate as black boxes. They can deliver highly accurate predictions while offering little visibility into how those predictions are formed.

For executives, regulators, and customers, this opacity raises legitimate concerns:

- Accountability: If a model makes a mistake, who is responsible and how can the error be corrected?

- Fairness: Are models introducing or amplifying bias that disadvantages certain groups?

- Regulatory compliance: Many industries face legal requirements to explain automated decisions, particularly in finance, healthcare, and insurance.

- Trust: Customers are less likely to adopt or accept outcomes they cannot understand.

Without explainability, even the most accurate AI system risks rejection. Trust is built when stakeholders know not just the “what” but also the “why” behind each decision.

The Core Principles of Explainable AI

Embedding explainability into AI systems requires more than adding reports after the fact. It involves design principles that prioritize clarity and transparency from the start. The most effective approaches reflect these principles:

- Transparency: Models should provide visibility into their structure, data sources, and reasoning processes. This does not mean exposing proprietary algorithms but rather offering clear descriptions of how outputs are generated.

- Interpretability: Explanations must be understandable by the intended audience. A data scientist may require detailed feature attributions, while a business executive may only need a high-level summary.

- Consistency: Similar inputs should produce similar explanations, and the same input should produce the same explanation every time. Predictable explanations build user confidence that the system behaves reliably.

- Actionability: Stakeholders must be able to use explanations to make better decisions. For example, a bank officer should understand why a loan application was flagged so they can take corrective steps if necessary.

By adhering to these principles, organizations design AI systems that are both powerful and trustworthy.
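As a rough illustration of interpretability and actionability, the Python sketch below renders one explanation for two audiences. The feature names, contribution values, and suggested actions are all hypothetical, and the contributions are assumed to come from whatever attribution method the organization has chosen.

```python
from typing import Dict, List, Tuple

# Hypothetical per-feature contributions to a loan risk score.
# Positive values push the application toward being flagged.
CONTRIBUTIONS: Dict[str, float] = {
    "debt_to_income": 0.42,
    "missed_payments": 0.31,
    "years_employed": -0.12,
    "account_age": -0.05,
}

# Hypothetical follow-up steps a bank officer could take per feature.
SUGGESTED_ACTIONS: Dict[str, str] = {
    "debt_to_income": "verify reported income and outstanding debt",
    "missed_payments": "review payment history for reporting errors",
}


def technical_view(contributions: Dict[str, float]) -> List[Tuple[str, float]]:
    """Full attribution list, largest absolute effect first, for data scientists."""
    # Ties are broken by name so the ordering is identical on every call.
    return sorted(contributions.items(), key=lambda kv: (-abs(kv[1]), kv[0]))


def summary_view(contributions: Dict[str, float], top_n: int = 2) -> str:
    """Short plain-language summary with next steps, for business users."""
    # Keep only the factors that raised the score, strongest first.
    drivers = [name for name, value in technical_view(contributions) if value > 0][:top_n]
    if not drivers:
        return "No factor raised the risk score."
    lines = ["Flagged mainly because of: " + ", ".join(drivers) + "."]
    for name in drivers:
        action = SUGGESTED_ACTIONS.get(name)
        if action:
            lines.append(f"Next step for {name}: {action}.")
    return "\n".join(lines)


if __name__ == "__main__":
    for name, value in technical_view(CONTRIBUTIONS):
        print(f"{name:>16}: {value:+.2f}")
    print(summary_view(CONTRIBUTIONS))
```

Both views are derived from the same contribution data, so the detailed and summary explanations cannot drift apart, and the deterministic sort keeps the output stable between calls.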

Tools and Techniques for Explainability

Several tools and methods have emerged to make AI more transparent. Each offers different strengths depending on the complexity of the model and the needs of the stakeholders:

- Feature attribution methods such as SHAP or LIME highlight which variables most influenced a prediction.

- Model simplification techniques distill complex models into more interpretable forms, such as a shallow decision tree trained to approximate the original model's predictions, without sacrificing too much accuracy.

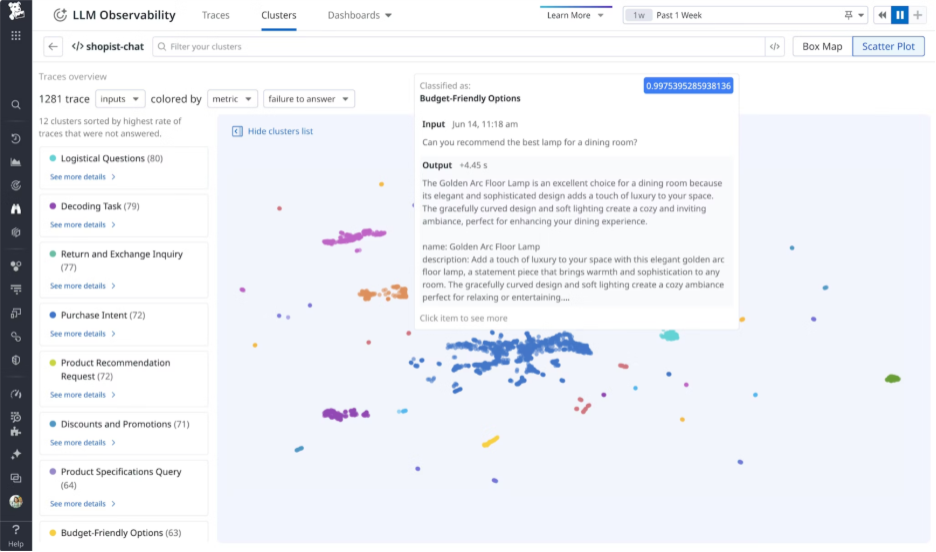

- Visualization tools translate abstract model behavior into intuitive charts or graphs. A good example of this is Datadog’s LLM Observability module.

- Rule-based overlays combine traditional if-then logic with AI outputs to make decision processes clearer.

By using a combination of these approaches, businesses can tailor explanations to different audiences while maintaining rigor.
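To make the combination concrete, here is a minimal Python sketch that pairs a simple feature attribution with a rule-based overlay. It does not call SHAP or LIME. Instead it assumes a hypothetical linear risk model, where each feature's contribution relative to a baseline can be computed directly as weight times the difference from the baseline value. The weights, baseline, threshold, and policy rule are invented for illustration.

```python
from typing import Dict, List

# Hypothetical linear risk model: score = BIAS + sum(weight * value).
WEIGHTS: Dict[str, float] = {
    "debt_to_income": 2.0,
    "missed_payments": 0.5,
    "years_employed": -0.1,
}
BIAS = 0.0

# Hypothetical baseline: average feature values of a reference population.
BASELINE: Dict[str, float] = {
    "debt_to_income": 0.3,
    "missed_payments": 1.0,
    "years_employed": 5.0,
}


def score(applicant: Dict[str, float]) -> float:
    """Risk score of the linear model for one applicant."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def attribute(applicant: Dict[str, float]) -> Dict[str, float]:
    """Per-feature contribution relative to the baseline applicant."""
    # For a linear model these contributions add up exactly to
    # score(applicant) - score(BASELINE).
    return {name: WEIGHTS[name] * (applicant[name] - BASELINE[name]) for name in WEIGHTS}


def rule_overlay(applicant: Dict[str, float], model_score: float,
                 threshold: float = 1.5) -> List[str]:
    """If-then rules layered on the model output, returned as readable reasons."""
    reasons: List[str] = []
    if model_score >= threshold:
        reasons.append(
            f"Model score {model_score:.2f} is at or above threshold {threshold:.2f}"
        )
    if applicant["missed_payments"] >= 3:
        reasons.append("Policy rule: three or more missed payments requires manual review")
    return reasons


if __name__ == "__main__":
    applicant = {"debt_to_income": 0.6, "missed_payments": 3.0, "years_employed": 2.0}
    model_score = score(applicant)
    for name, value in sorted(attribute(applicant).items(), key=lambda kv: -abs(kv[1])):
        print(f"{name:>16}: {value:+.2f}")
    for reason in rule_overlay(applicant, model_score):
        print(reason)
```

The attribution answers which inputs moved the score, while the overlay states in plain if-then terms why the case was routed for review. For non-linear models, the hand-written `attribute` function would be replaced by a dedicated attribution tool.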

Business Impact of Explainable AI

Explainability does not just satisfy regulators. It creates tangible business benefits:

- Enhanced decision-making: Clearer insights allow leaders to act with greater confidence.

- Reduced risk: Transparent systems make it easier to detect and correct errors before they cause harm.

- Customer trust and loyalty: Businesses that can explain outcomes build stronger relationships with clients who feel treated fairly.

- Innovation readiness: Organizations that prioritize explainability are better positioned to experiment with new AI applications without fear of uncontrolled consequences.

In short, explainability turns AI from a risky black box into a dependable partner for business growth.

Building a Culture of Transparent AI

Explainability is not solely a technical problem. It is also cultural. Businesses must create environments where clarity and accountability are valued as much as performance metrics. This means:

- Training employees to understand AI concepts at a practical level.

- Establishing governance structures that require explanations for all automated decisions.

- Rewarding teams not just for accuracy but also for the clarity of their models.

By embedding transparency into culture, organizations reinforce the trust that technology alone cannot guarantee.

Trust as a Strategic Asset

Trust is not a byproduct of AI adoption; it is the outcome of deliberate design and governance. Organizations that prioritize explainability create systems that inspire confidence, reduce risk, and enable long-term innovation. As AI becomes more deeply woven into business decisions, those who build the architecture of trust will stand apart.

In a marketplace where technology is accessible to many, trust becomes the true differentiator. Companies that embed explainability at every stage will not only meet compliance requirements but also earn the confidence of customers, partners, and regulators. Trust, once established, becomes a strategic asset that accelerates growth and helps ensure that AI-driven decisions lead to sustainable success.